Inside Cursor’s Network Brain: How AI Agents, HTTP/2, and SSE Make the IDE Feel Alive

Imagine an IDE that doesn’t just respond but collaborates—one that feels as if it anticipates your next move. That’s precisely what the team behind Cursor set out to build: an AI-powered development environment where the line between human input and machine intelligence disappears into seamless flow.

Behind that effortless experience lies one of the most complex engineering challenges in modern software design—building a responsive, adaptive network stack capable of supporting real-time communication between AI agents running inside the IDE and those operating in the cloud. Every prompt, completion, and code transformation depends on a continuous conversation between these agents, carried out over protocols like HTTP/2, Server-Sent Events (SSE), and gRPC.

In this post, we explore the nuances of this system. You’ll see how Cursor’s engineers designed a dynamic network stack that adapts automatically to unpredictable environments, from open home Wi-Fi connections to locked-down enterprise networks filled with proxies and certificate filters.

For the technical reader, we’ll explore the fine details of how HTTP/2 streaming and SSE enable the IDE to maintain near real-time communication, even when conditions change. For the non-technical reader, this post offers a clear window into how these invisible technologies and AI agents work together to make Cursor feel quick, reliable, and almost alive.

The World Is Complex

We focus on two interconnected sources of complexity when building an IDE like Cursor: the network it connects over and the machine it is installed on.

Each adds layers of unpredictability and friction that the engineering team must handle with precision.

The Hidden Complexity Beneath Every Installation

Every developer knows this truth: no two machines are ever truly the same. Each one is its own universe—unique combinations of operating systems, security policies, proxies, and antivirus tools quietly shaping how software behaves. What seems like a routine installation is, in reality, a dance with chaos.

For the Cursor team, this complexity wasn’t theoretical. The Cursor IDE must constantly interact with cloud services: authenticating, streaming, and exchanging data in real time. Yet the local environment where it runs often decides whether that connection thrives or fails. Firewalls block essential ports. Expired certificates break trust chains. Outdated system libraries silently interfere with encrypted communication. And nowhere is this more challenging than inside a corporate network.

In many enterprise environments, every outbound request must pass through a proxy server. These proxies inspect, decrypt, and re-encrypt traffic to enforce security and compliance rules. This results in a complex system where even a basic handshake between the IDE and its cloud backend may fail due to factors beyond the developer's control.

Cursor’s engineers quickly realized that surviving in this ecosystem required more than just robust code—it required resilience by design. One static connection strategy wasn't enough. They built a flexible networking stack capable of detecting its environment and dynamically adapting. When conditions are ideal—such as a personal laptop at home on a direct internet connection—the system chooses the most performant path. But when it detects a constrained corporate network, it automatically falls back to alternative communication methods that trade speed for reliability.

This adaptive model, layered atop the VS Code foundation on which Cursor is built, ensures that every user—from the developer coding at home to the engineer deep inside a Fortune 500 network—experiences a stable connection.

What looks simple on the surface is, in truth, an elegant orchestration of complexity. The team didn’t just build an installation process; they built a system capable of thriving in the unpredictable environments of the real world. And that ability to adapt, recover, and keep working is what turns reliable software into trusted software.

Cursor AI IDE

If you know the HTTP protocol, read on; if not, read the primer section to learn about the protocols and technologies used in the Cursor AI IDE.

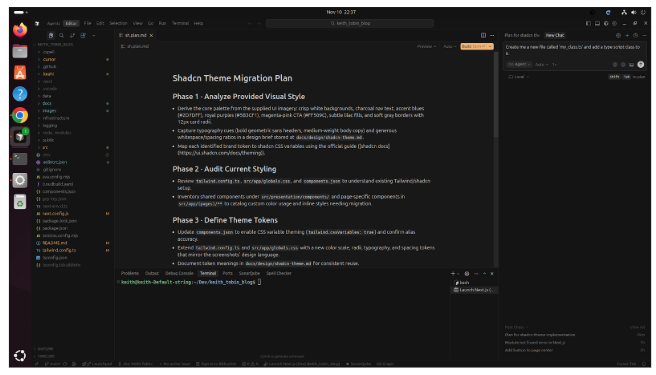

The Cursor AI IDE is an Electron application and a fork of Microsoft’s open-source VS Code project. VS Code has become one of the most widely used IDEs for software development, thanks to ongoing investment from Microsoft and a large supporting community. Its extension marketplace offers a huge range of add-ons, most of them free and many open source, that turn VS Code into a complete workhorse for software development.

The Cursor team took the open-source VS Code code base from GitHub and turned it into a commercial product by building AI features into the core editor. The main window you interact with is where you enter a prompt asking the Cursor AI IDE agent to carry out a task. For example: ‘Create me a new class file called my_class.ts and then create a TypeScript class using the same name as the file.’

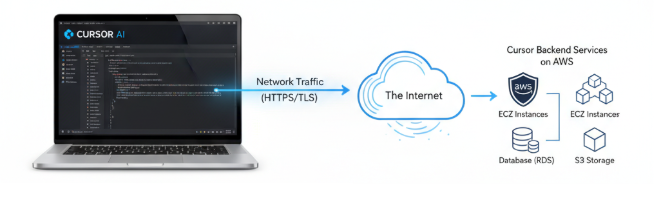

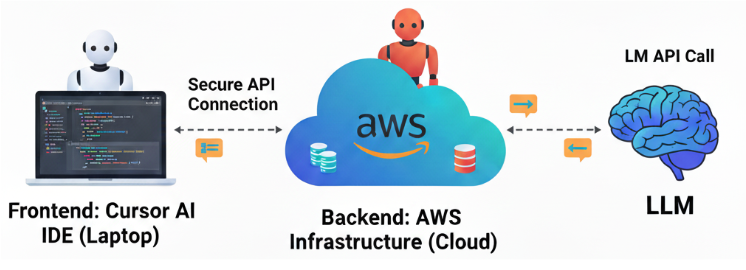

The Cursor AI IDE connects to its remote services, hosted in AWS datacenters, via the internet. It primarily uses the gRPC protocol layered over HTTPS.

This approach is highly effective because it leverages the standard internet protocols (HTTPS/HTTP) commonly permitted by corporate networks. The communication also incorporates Server-Sent Events (SSE).

The exact networking behavior and details—specifically regarding the underlying HTTP/1.1 or HTTP/2 protocol and any corporate network restrictions—will be discussed in subsequent sections.

Connectivity

The Cursor AI IDE uses HTTPS to connect to the Cursor backend service, as outlined above. The connection is encrypted with TLS; on most current operating systems this will be TLS 1.3, which encrypts traffic in transit and, through certificate validation, protects against man-in-the-middle attacks. The version actually used, however, depends on what the client and the Cursor services in AWS negotiate during the TLS handshake. Because both sides must agree on the protocol version, a connection in a corporate environment (for example, one passing through a TLS-inspecting proxy) may fall back to TLS 1.2.
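As a rough illustration, the sketch below uses Node.js’s built-in `tls` module (available to an Electron app) to show how a client can allow TLS 1.2 as a floor, prefer TLS 1.3, and report which version the handshake actually settled on. The helper names `buildTlsOptions` and `describeNegotiatedVersion` and the version policy are hypothetical; this is not Cursor’s code.

```typescript
import * as tls from "node:tls";

// Hypothetical policy: accept TLS 1.2 or 1.3, never anything older.
function buildTlsOptions(host: string): tls.ConnectionOptions {
  return {
    host,
    port: 443,
    servername: host, // SNI, so the server presents the right certificate
    minVersion: "TLSv1.2", // tolerate 1.2 (common behind inspecting proxies)
    maxVersion: "TLSv1.3", // prefer 1.3 when both ends support it
    ALPNProtocols: ["h2", "http/1.1"], // offer HTTP/2 first, then HTTP/1.1
  };
}

// Turn the value returned by TLSSocket.getProtocol() into a short diagnostic.
function describeNegotiatedVersion(protocol: string | null): string {
  switch (protocol) {
    case "TLSv1.3":
      return "TLS 1.3 negotiated";
    case "TLSv1.2":
      return "TLS 1.2 negotiated (possibly downgraded by a proxy)";
    default:
      return `unexpected or unknown TLS version: ${protocol ?? "none"}`;
  }
}
```

To use it, pass the options to `tls.connect(buildTlsOptions(host), callback)` and, inside the callback, call `describeNegotiatedVersion(socket.getProtocol())`. The `ALPNProtocols` list is how a client offers HTTP/2 (`h2`) ahead of HTTP/1.1 during the same handshake; the server’s choice is exposed as `socket.alpnProtocol`.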

AI Agents

The following may not be entirely accurate; it is a conceptual model based on the messages we observed between the Cursor AI IDE and the backend services running in AWS. It helps us make sense of the messaging and the otherwise opaque code, and shows how such a design could be implemented in software.

Cursor AI IDE Agent

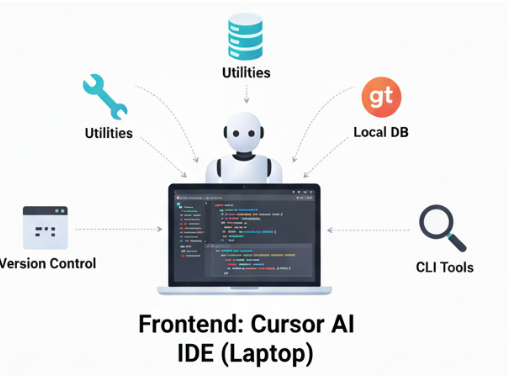

This agent lives inside the Cursor AI IDE software running on your laptop. The Cursor team refers to this logical construct as an agent in the IDE, so it helps to think of it that way too. It is responsible for communicating with the backend agent running in the Cursor backend services on AWS. What makes the concept intriguing is that this agent holds a bidirectional conversation with a manager agent on the Cursor backend, sending and listening for messages during a task cycle. In this way, the Cursor AI IDE agent can carry out tasks with its available tools on behalf of the agent running on the backend.

You can think of this agent as the worker agent: it receives instructions from the manager, uses the available tools to carry out tasks, and reports back to the manager agent when they are complete. Running in the Cursor AI IDE on your laptop, it has access to hundreds of tools for working within the IDE and the operating system, including event calls, MCP, and the CLI. Under the manager agent’s instruction, it can even write code to carry out a task when no existing tool fits. Note also that there may be dozens of these worker agents carrying out tasks; the design is not limited to a single agent.
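To make the worker role concrete, here is a minimal TypeScript sketch of a worker that keeps a registry of local tools and runs whichever one the manager names. The message shapes (`ToolCall`, `ToolResult`), the `create_file` tool, and the in-memory `files` map are all hypothetical; Cursor’s actual protocol is not public.

```typescript
// Hypothetical message shapes; the real protocol is not public.
interface ToolCall {
  id: string;
  tool: string;
  args: Record<string, string>;
}

interface ToolResult {
  id: string;
  ok: boolean;
  output: string;
}

type ToolFn = (args: Record<string, string>) => string;

// The worker keeps a registry of local tools and runs whatever the manager asks for.
class WorkerAgent {
  private readonly tools = new Map<string, ToolFn>();

  register(name: string, fn: ToolFn): void {
    this.tools.set(name, fn);
  }

  handle(call: ToolCall): ToolResult {
    const fn = this.tools.get(call.tool);
    if (!fn) {
      // Report unknown tools back instead of throwing, so the manager can replan.
      return { id: call.id, ok: false, output: `unknown tool: ${call.tool}` };
    }
    try {
      return { id: call.id, ok: true, output: fn(call.args) };
    } catch (err) {
      return { id: call.id, ok: false, output: String(err) };
    }
  }
}

// Example tool modeled on the "create my_class.ts" request (hypothetical, in-memory).
const files = new Map<string, string>();
const worker = new WorkerAgent();
worker.register("create_file", ({ path, contents }) => {
  files.set(path, contents);
  return `created ${path}`;
});
```

Returning failures as data rather than throwing lets the manager see what went wrong and decide what to do next.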

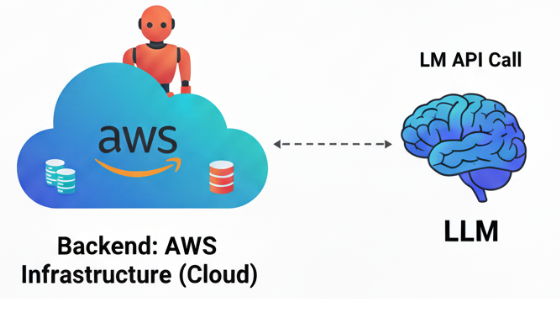

Cursor AI IDE Backend Service Agent

The manager agent runs on the Cursor AI backend and is the brain of the operation: it works out what the user wants to do and plans how to achieve it using the Cursor AI IDE and its available tools. The manager agent uses the backend LLM for understanding and planning. Once it has understood the request and made a plan, its goal is to ask the worker agent to use the tools at its disposal to carry out the tasks, report the results, and complete the overall user request.

Agent HTTP Connectivity

The two AI agents need bidirectional messaging, which presents a challenge: keeping the user experience responsive while the manager agent requests multiple tasks from the Cursor AI IDE agent. This is where either HTTP/1.1 with SSE or HTTP/2 streaming comes in. HTTP/2 is newer, supports true bidirectional streaming, enables near real-time communication, and provides the best user experience. In some corporate environments, however, firewalls and proxies prevent HTTP/2 streaming from working. That is where the Cursor AI IDE’s proprietary HTTP fallback comes into play.

Agent HTTP Fallback

When the Cursor AI IDE agent on your laptop connects to the Cursor AI IDE backend agent, it uses Cursor’s proprietary HTTP fallback process. Once a secure TLS connection to the backend is established, the software determines which version of the HTTP protocol works best under the current network conditions.

The connection process begins when the Cursor AI IDE agent initiates an HTTP/2 streaming request to its manager agent hosted on AWS. HTTP/2 provides full-duplex streaming, enabling high-performance, bidirectional communication between the Cursor AI IDE and its backend services. This setup allows the Cursor AI IDE to receive and send messages efficiently, keeping the interaction smooth and responsive.

If the HTTP/2 streaming request fails, or the network does not support it, the Cursor AI IDE agent automatically switches to HTTP/1.1 with Server-Sent Events (SSE). This fallback combination replicates the streaming behavior: over HTTP/1.1, the backend continuously sends event-based updates to the Cursor AI IDE. Because SSE only flows from server to client, the IDE’s replies travel back as separate HTTP requests. The updates contain instructions that guide the IDE to perform operations, keeping the experience interactive even without native HTTP/2 support.

This fallback mechanism is designed to be fully transparent to the AI agent at the communication layer. From the agent’s perspective, it continues to operate over a reliable, bidirectional message channel regardless of the underlying protocol. By prioritizing the most efficient connection first and seamlessly falling back when necessary, the Cursor AI IDE ensures both performance and reliability, keeping developers connected and productive across varied network environments.
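The fallback idea can be sketched as follows, assuming a hypothetical `AgentChannel` interface that hides the transport from the agent. The factories stand in for real connection code; in SSE mode, `send` would have to issue separate HTTP requests, because SSE only carries data from server to client. This illustrates the pattern, not Cursor’s proprietary implementation.

```typescript
// Hypothetical abstraction: the agent sees one channel type regardless of transport.
interface AgentChannel {
  readonly transport: "http2-stream" | "http1.1-sse";
  send(message: string): Promise<void>;
}

type ChannelFactory = () => Promise<AgentChannel>;

// Try HTTP/2 streaming first; on any failure, fall back to HTTP/1.1 + SSE.
async function openAgentChannel(
  openHttp2Stream: ChannelFactory,
  openSseChannel: ChannelFactory,
  log: (msg: string) => void = () => {},
): Promise<AgentChannel> {
  try {
    return await openHttp2Stream();
  } catch (err) {
    log(`HTTP/2 stream failed (${String(err)}); falling back to SSE`);
    return await openSseChannel();
  }
}

// Fake factories for demonstration only.
const fakeChannel = (transport: AgentChannel["transport"]): AgentChannel => ({
  transport,
  send: async () => {},
});
const blockedByProxy: ChannelFactory = async () => {
  throw new Error("proxy refused HTTP/2");
};
const http2Ok: ChannelFactory = async () => fakeChannel("http2-stream");
const sseOk: ChannelFactory = async () => fakeChannel("http1.1-sse");
```

Calling `openAgentChannel(blockedByProxy, sseOk)` resolves to the SSE channel, while `openAgentChannel(http2Ok, sseOk)` keeps HTTP/2; the caller never needs to know which one it got.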

Agent Workflow

The Cursor AI system functions through a structured and intelligent workflow that enables real-time collaboration between two main agents:

The manager agent is hosted on the Cursor backend (AWS).

The worker agent operates within the Cursor AI IDE, which is located in the user’s local environment.

Together, they enable seamless communication, task execution, and adaptive replanning to achieve user goals efficiently.

Visual Overview of the Workflow


Figure: Sequence diagram illustrating the bidirectional message flow between the Cursor AI IDE (worker agent) and backend services (manager agent).

This diagram represents the entire agent communication cycle—from the moment a user submits a prompt to when the final result is displayed in the IDE. Each color-coded line indicates a distinct stage of interaction between the user, Cursor IDE, AI Service, Tool Service, and Diff Service.

Step-by-Step Breakdown

1. User Initiation

The workflow begins when a user clicks “Ask AI” or submits a prompt through the Cursor IDE.

This triggers the worker agent to send a POST request to the AI Service endpoint.

This starts a Server-Sent Events (SSE) stream, allowing real-time streaming of AI responses.

2. AI Service Planning

The AI Service (manager agent) processes the initial prompt and formulates a high-level plan using a Large Language Model (LLM).

It identifies the tools required to fulfill the task and sends tool call instructions back through the stream to the worker agent.

3. Tool Execution

Upon receiving the tool call, the worker agent communicates with the Tool Service via POST/SubmitToolCallEvents.

This service executes specific actions such as file creation, code generation, or analysis.

The status initially appears as "Pending" until the operation completes successfully.

4. Result Acknowledgment

As the tool completes its work, the Tool Service sends an update (Status: Success), which the worker agent then forwards back to the AI Service.

The system maintains this bidirectional message loop, with both agents exchanging continuous updates until the full task is complete.
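Steps 3 and 4 describe a small state machine for each tool call. The sketch below models it with the diagram’s “Pending” and “Success” labels; the “Failed” state, the `ToolCallTracker` class, and its `outbox` (events the worker would forward to the AI Service) are assumptions added for illustration.

```typescript
// "Pending" and "Success" mirror the diagram; "Failed" is an assumption.
type ToolCallStatus = "Pending" | "Success" | "Failed";

interface ToolCallEvent {
  toolCallId: string;
  status: ToolCallStatus;
  detail?: string;
}

class ToolCallTracker {
  private readonly statuses = new Map<string, ToolCallStatus>();
  // Events the worker would forward to the manager (AI Service).
  readonly outbox: ToolCallEvent[] = [];

  start(toolCallId: string): void {
    this.record({ toolCallId, status: "Pending" });
  }

  complete(toolCallId: string, ok: boolean, detail?: string): void {
    // Only a pending call may complete; this rejects duplicate completions.
    if (this.statuses.get(toolCallId) !== "Pending") {
      throw new Error(`tool call ${toolCallId} is not pending`);
    }
    this.record({ toolCallId, status: ok ? "Success" : "Failed", detail });
  }

  statusOf(toolCallId: string): ToolCallStatus | undefined {
    return this.statuses.get(toolCallId);
  }

  private record(event: ToolCallEvent): void {
    this.statuses.set(event.toolCallId, event.status);
    this.outbox.push(event);
  }
}
```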

5. File Updates and Diff Management

The worker agent submits the results of tool operations (e.g., code differences, modifications) via POST/BidAppend.

The Diff Service then tracks and logs these changes, ensuring every modification is recorded and versioned.

Each successful update is acknowledged through a BidAppend acknowledgment message, confirming synchronization across all services.
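One plausible way to get the acknowledged, ordered diff log described in step 5 is to give each chunk a sequence number. In this hypothetical sketch (`DiffChunk`, `DiffLog`, and the ack shape are invented, not taken from the BidAppend protocol), the Diff Service records a chunk only if it is the next one expected and always acknowledges the highest sequence number it holds, so retries are harmless and gaps are visible.

```typescript
// Hypothetical shapes; the real BidAppend payload is not documented here.
interface DiffChunk {
  seq: number; // 1, 2, 3, ... within one editing session
  file: string;
  patch: string;
}

interface Ack {
  seq: number; // highest sequence number the log has recorded
}

class DiffLog {
  readonly entries: DiffChunk[] = [];

  private get lastSeq(): number {
    return this.entries.length === 0 ? 0 : this.entries[this.entries.length - 1].seq;
  }

  // Record a chunk only if it is the next one expected. Duplicates (retries)
  // and out-of-order chunks are not recorded; the ack tells the sender how far
  // the log actually got.
  append(chunk: DiffChunk): Ack {
    if (chunk.seq === this.lastSeq + 1) {
      this.entries.push(chunk);
    }
    return { seq: this.lastSeq };
  }
}
```

When the sender receives an ack lower than the chunk it just sent, it knows to resend from `ack.seq + 1`.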

6. Validation and Error Checking

Once the main work is done, the manager agent instructs the worker to verify outputs—such as checking for file build errors or inconsistencies.

If issues are detected, the manager agent replans dynamically, issuing new tool instructions until the task achieves a successful end state.
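The replanning loop in step 6 can be sketched as a queue of steps that is replaced whenever a step fails. In this hypothetical TypeScript version, the executor stands in for the worker agent and the replanner for the manager’s LLM; the step budget (`maxSteps`) is an assumption that guarantees the loop terminates.

```typescript
interface Step {
  tool: string;
  args: Record<string, string>;
}

interface StepOutcome {
  ok: boolean;
  output: string;
}

// Stand-ins: the worker agent executes a step, the manager's LLM replans.
type Executor = (step: Step) => StepOutcome;
type Replanner = (failed: Step, outcome: StepOutcome) => Step[];

function runWithReplanning(
  plan: Step[],
  execute: Executor,
  replan: Replanner,
  maxSteps = 20,
): { ok: boolean; transcript: string[] } {
  let queue = [...plan];
  const transcript: string[] = [];
  let lastOk = true;
  for (let executed = 0; queue.length > 0; executed++) {
    if (executed >= maxSteps) return { ok: false, transcript }; // budget exhausted
    const [step, ...rest] = queue;
    const outcome = execute(step);
    lastOk = outcome.ok;
    transcript.push(`${step.tool}: ${outcome.ok ? "ok" : "failed"}`);
    // On failure, the rest of the old plan is discarded in favor of the new one.
    queue = outcome.ok ? rest : replan(step, outcome);
  }
  return { ok: lastOk, transcript };
}

// Example: the first build fails until a hypothetical "fix_import" step runs.
let importFixed = false;
const fakeWorker: Executor = (step) => {
  if (step.tool === "fix_import") importFixed = true;
  if (step.tool === "build" && !importFixed) return { ok: false, output: "1 error" };
  return { ok: true, output: "done" };
};
const result = runWithReplanning(
  [{ tool: "create_file", args: { path: "my_class.ts" } }, { tool: "build", args: {} }],
  fakeWorker,
  (failed) => [{ tool: "fix_import", args: {} }, failed],
);
```

Here the failed build triggers a new plan (fix the import, then rebuild), and the run ends in a successful state, matching the cycle the step describes.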

7. Completion and Display

After all subtasks are completed and validated, the final response is streamed back to the Cursor IDE, which displays the output, result, or code completion to the user.

This marks the end of the agent workflow cycle for that task.

Summary

The Agent Workflow is an elegant orchestration of AI-driven decision-making and real-time task execution:

The user initiates a task.

The manager agent (AI Service) plans and coordinates.

The worker agent executes using IDE tools.

Tool and Diff Services track and validate actions.

Continuous feedback enables replanning and refinement.

The final result is delivered seamlessly back to the user.

This continuous loop of planning, execution, feedback, and replanning ensures the Cursor AI system behaves as a dynamic, adaptive, and reliable collaborative environment—blending the intelligence of the cloud-based manager agent with the precision of the local worker agent.


Primer: Understanding HTTP/1.1, HTTP/2, SSE, Streaming, and gRPC

This section is optional for readers who already understand the fundamentals of web communication protocols. For everyone else, this primer explains the key technologies that power the networking stack of the Cursor AI IDE. Understanding them makes it clear how Cursor achieves seamless, low-latency, and secure communication between your local machine and the cloud.

What Is HTTP?

At its core, HTTP (the Hypertext Transfer Protocol) is the web’s primary language. A client (your Cursor IDE) sends a request to a server (Cursor’s cloud backend) and waits for a response. In the original HTTP/1.0 model, each interaction is discrete: you send a request, the server responds, and the connection closes.

In practical terms, when you invoke an AI completion in Cursor, your IDE asks something like, “What are my next lines of code?” The backend processes the request and returns a suggestion. Under HTTP/1.0, that means opening a connection, sending headers and a body, waiting, receiving the reply, and closing the connection. That overhead adds latency.

HTTP/1.1 improved things by enabling persistent connections, where multiple requests could share a single connection. But fundamentally, the communication is client-driven: the client asks, and the server responds. If the server has something new (say, a background diagnostic result) that the client didn’t ask for, the model needs another request. For Cursor, where every keystroke might trigger cloud inference, that model becomes a bottleneck.

Example: start typing a loop such as `for (int i = 0; …` in Cursor. The IDE sends a request to the AI service for suggestions and gets them back. But as soon as you type a few more characters, another request is required. Each request carries latency and overhead, making the experience feel choppy rather than fluid.
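The difference persistent connections make can be measured locally. This self-contained Node.js sketch starts a throwaway HTTP/1.1 server on `127.0.0.1` and counts the TCP connections needed for three sequential requests, with and without a keep-alive `http.Agent`. The helper names are illustrative; nothing here is specific to Cursor.

```typescript
import * as http from "node:http";
import type { AddressInfo } from "node:net";

// Send one GET and read the whole body; a keep-alive socket can only be
// reused once the previous response has been fully consumed.
function get(agent: http.Agent, port: number, path: string): Promise<string> {
  return new Promise((resolve, reject) => {
    http
      .get({ host: "127.0.0.1", port, path, agent }, (res) => {
        let body = "";
        res.setEncoding("utf8");
        res.on("data", (chunk: string) => {
          body += chunk;
        });
        res.on("end", () => resolve(body));
      })
      .on("error", reject);
  });
}

// Count the TCP connections a local server sees for three sequential requests.
async function countConnections(keepAlive: boolean): Promise<number> {
  let connections = 0;
  const server = http.createServer((req, res) => res.end(`reply to ${req.url}`));
  server.on("connection", () => {
    connections++;
  });
  await new Promise<void>((resolve) => server.listen(0, "127.0.0.1", () => resolve()));
  const { port } = server.address() as AddressInfo;

  // maxSockets: 1 forces every keep-alive request onto the same socket.
  const agent = keepAlive
    ? new http.Agent({ keepAlive: true, maxSockets: 1 })
    : new http.Agent({ keepAlive: false });
  for (const path of ["/a", "/b", "/c"]) {
    await get(agent, port, path);
  }
  agent.destroy(); // close any sockets the agent is still holding
  await new Promise<void>((resolve) => server.close(() => resolve()));
  return connections;
}
```

With keep-alive, the three requests share one connection; without it, each request opens a new one. Even with reuse, though, the server still cannot send anything the client did not ask for.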

Extra Reference: Hyper Text Transfer Protocol Crash Course – HTTP 1.0/1.1/2

HTTP vs HTTPS

When we run HTTP over TLS (Transport Layer Security), we get HTTPS. This encryption layer protects the confidentiality and integrity of the data and verifies that the server you are talking to is the one you expect. HTTPS is essential for the Cursor IDE, which handles source code, proprietary logic, and private data.

Example in Cursor: Your Cursor IDE communicates with an AI model backend. If someone intercepts that traffic, they might see your code context, suggestions, or even proprietary snippets. HTTPS protects against that. But the real world complicates things: corporate environments often use proxies that inspect traffic, replace certificates, or rely on self-signed certificates. That interrupts the normal “server certificate chains to a trusted root” flow. Cursor’s engineers wrote logic to detect certificate issues and proxy interference, choose fallback paths, and keep the user connected securely.

Extra Reference: TLS Handshake Explained – Computerphile

Certificates and Trust

Certificates are digital credentials that assert, “This server is who it says it is.” They’re signed by a Certificate Authority (CA) that your machine trusts. The chain of trust goes something like this: root CA → intermediate CA → server certificate. If any link fails (expired certificate, unknown CA, mismatched chain), the authentication fails.

In practice, Cursor must handle a developer behind a corporate proxy that presents a self-signed cert, machines with outdated root CA lists, and OS-specific quirks in certificate validation. Cursor’s networking stack includes logical checks such as “Is this a corporate-signed proxy? If yes, trust it under these conditions; if no, fail safely.”

Example: A developer starts Cursor on a company network. The firewall intercepts and re-signs all TLS traffic. A naïve client would reject the connection (certificate mismatch). Cursor identifies the proxy’s certificate, checks it against policy, and either permits a fallback channel or displays an actionable error instead of failing silently.
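A sketch of that kind of logic, assuming a Node.js-based client: Node reports certificate-validation failures with OpenSSL-derived codes such as `SELF_SIGNED_CERT_IN_CHAIN` on the error’s `code` property, and the hypothetical `diagnoseCertError` below maps them to actionable messages rather than a silent failure. The categories and wording are illustrative, not Cursor’s.

```typescript
// Hypothetical categories for certificate problems seen in the field.
type CertProblem = "corporate-proxy" | "missing-issuer" | "expired" | "hostname-mismatch" | "unknown";

interface CertDiagnosis {
  problem: CertProblem;
  message: string;
}

// Map the `code` from a Node.js TLS error to an actionable explanation.
function diagnoseCertError(code: string | undefined): CertDiagnosis {
  switch (code) {
    case "SELF_SIGNED_CERT_IN_CHAIN":
    case "DEPTH_ZERO_SELF_SIGNED_CERT":
      return {
        problem: "corporate-proxy",
        message: "A self-signed certificate was presented. A TLS-inspecting proxy may be re-signing traffic; ask IT for its root CA certificate.",
      };
    case "UNABLE_TO_GET_ISSUER_CERT_LOCALLY":
    case "UNABLE_TO_VERIFY_LEAF_SIGNATURE":
      return {
        problem: "missing-issuer",
        message: "The certificate's issuer is not in the trusted store. A root or intermediate certificate may be missing.",
      };
    case "CERT_HAS_EXPIRED":
      return {
        problem: "expired",
        message: "A certificate in the chain has expired. Check the system clock and any proxy certificates.",
      };
    case "ERR_TLS_CERT_ALTNAME_INVALID":
      return {
        problem: "hostname-mismatch",
        message: "The certificate does not match the host name; the connection may be intercepted.",
      };
    default:
      return { problem: "unknown", message: `TLS connection failed (${code ?? "no error code"}).` };
  }
}
```

A client would call it from the socket’s `error` handler. The usual remedy for a corporate proxy is to trust its root CA explicitly, for example through Node’s `ca` connection option or the `NODE_EXTRA_CA_CERTS` environment variable, rather than disabling verification.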

Extra Reference: SSL/TLS Explained in 7 Minutes

TLS: The Foundation of Secure Communication

TLS, or Transport Layer Security, is the cryptographic protocol that underpins HTTPS. It establishes an encrypted tunnel between client and server—handshake, key exchange, cipher negotiation, then encrypted communication. The handshake establishes trust and provides a key for secure communication.

Cursor needs every connection to be secure, including AI services, telemetry, diagnostics, and updates. The engineering team built a system to automatically renew certificates, detect when weaker encryption methods are used, handle proxies that interfere with TLS, and keep working even when operating system libraries are missing or outdated.

Example: A developer uses Cursor on an older Windows machine whose root CA store is missing a recently added CA. The TLS handshake fails. Cursor detects the failed handshake and alerts the user with “Your root certificate store may be missing entries; here’s how to resolve it or choose an alternate path,” rather than simply crashing with “Connection failed.”

Extra Reference: What is TLS? (Transport Layer Security)

What Are Server-Sent Events (SSE)?

Server-Sent Events (SSE) allow the server to push updates down a single long-lived HTTP connection unidirectionally (server → client). The client opens an event stream (via EventSource in web browsers or the equivalent in native clients), and the server writes text-based messages when things change.

In the Cursor IDE context, this approach is a natural fit: as you type, the AI backend may generate suggestions, streaming diagnostics, or real-time linting results. Without SSE, the IDE would have to poll the server repeatedly, either wastefully opening and tearing down connections or sitting idle between polls. With SSE, the backend delivers updates as soon as they are ready.

Example: You type a large function in Cursor. Halfway through, the backend’s static-analysis module spots a potential null-pointer issue. With SSE, the IDE can display the warning the moment it is detected. Without SSE, Cursor might have to poll every few seconds, introducing noticeable delay.
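Browsers parse SSE for you through `EventSource`, but `EventSource` only issues GET requests, so a client that starts a stream with a POST (as in step 1 of the agent workflow earlier in this post) has to parse the `text/event-stream` format itself. The simplified parser below follows the core rules of that format (`data:`, `event:`, and `id:` fields, `:` comments, and a blank line to dispatch); the `SseParser` class is illustrative and deliberately skips lone-CR line endings and the `retry:` field.

```typescript
// A simplified parser for the text/event-stream format used by SSE.
// Handles LF and CRLF line endings (not lone CR) and ignores "retry:".
interface SseEvent {
  type: string; // from "event:", defaults to "message"
  data: string; // "data:" lines joined with "\n"
  id?: string; // last "id:" seen; persists across events
}

class SseParser {
  private buffer = "";
  private dataLines: string[] = [];
  private eventType = "";
  private lastId: string | undefined;

  constructor(private readonly onEvent: (event: SseEvent) => void) {}

  // Feed raw text as it arrives; chunks may split lines anywhere.
  push(chunk: string): void {
    this.buffer += chunk;
    let newline: number;
    while ((newline = this.buffer.indexOf("\n")) !== -1) {
      let line = this.buffer.slice(0, newline);
      this.buffer = this.buffer.slice(newline + 1);
      if (line.endsWith("\r")) line = line.slice(0, -1);
      this.processLine(line);
    }
  }

  private processLine(line: string): void {
    if (line === "") {
      this.dispatch(); // a blank line ends the current event
      return;
    }
    if (line.startsWith(":")) return; // comment, often used as a keep-alive
    const colon = line.indexOf(":");
    const field = colon === -1 ? line : line.slice(0, colon);
    let value = colon === -1 ? "" : line.slice(colon + 1);
    if (value.startsWith(" ")) value = value.slice(1); // drop one leading space
    if (field === "data") this.dataLines.push(value);
    else if (field === "event") this.eventType = value;
    else if (field === "id") this.lastId = value;
  }

  private dispatch(): void {
    if (this.dataLines.length > 0) {
      this.onEvent({ type: this.eventType || "message", data: this.dataLines.join("\n"), id: this.lastId });
    }
    this.dataLines = [];
    this.eventType = "";
  }
}
```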

Extra References:

- Server-Sent Events Crash Course

- Server-Sent Events (SSE) vs. WebSocket – Live Tutorial in NodeJS

Streaming with HTTP/2

HTTP/2 introduced multiplexing: many independent streams can share a single connection. This feature allows messages to be sent without waiting for one request to finish before sending another.

For Cursor, the change means multiple real-time operations can share the same connection rather than opening dozens of separate ones or serializing them. For instance, while the AI-completion service streams code suggestions, simultaneously the diagnostics service streams lint warnings, and the telemetry service uploads usage data—all multiplexed over one HTTP/2 link. Result: lower overhead, less latency, smoother experience.

Example: Imagine your Cursor IDE triggers three tasks—AI code completion, real-time syntax checking, and background repository sync. With HTTP/2, instead of having three separate connections or queuing issues, all results arrive faster and in parallel.
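Multiplexing is easy to observe locally. The sketch below uses Node.js’s built-in `http2` module to start a throwaway cleartext HTTP/2 server, then issues three concurrent requests named after the example’s tasks over one client session. The paths and helper names are hypothetical; the point is that the server sees a single session carrying three streams.

```typescript
import * as http2 from "node:http2";
import type { AddressInfo } from "node:net";

// Read one response body from a stream opened on an existing session.
function request(session: http2.ClientHttp2Session, path: string): Promise<string> {
  return new Promise((resolve, reject) => {
    const stream = session.request({ ":path": path });
    let body = "";
    stream.setEncoding("utf8");
    stream.on("data", (chunk: string) => {
      body += chunk;
    });
    stream.on("end", () => resolve(body));
    stream.on("error", reject);
    stream.end(); // no request body
  });
}

async function runMultiplexDemo(): Promise<{ bodies: string[]; sessions: number }> {
  // Throwaway cleartext HTTP/2 (h2c) server; real traffic would use TLS.
  let sessions = 0;
  const server = http2.createServer();
  server.on("session", () => {
    sessions++;
  });
  server.on("stream", (stream, headers) => {
    stream.respond({ ":status": 200, "content-type": "text/plain" });
    stream.end(`result for ${headers[":path"]}`);
  });
  await new Promise<void>((resolve) => server.listen(0, "127.0.0.1", () => resolve()));
  const { port } = server.address() as AddressInfo;

  // One client session (one TCP connection) carrying three concurrent streams.
  const session = http2.connect(`http://127.0.0.1:${port}`);
  try {
    const bodies = await Promise.all([
      request(session, "/completion"),
      request(session, "/syntax-check"),
      request(session, "/repo-sync"),
    ]);
    return { bodies, sessions };
  } finally {
    session.close();
    server.close();
  }
}
```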

Extra Reference: How HTTP/2 Works, Performance, Pros & Cons

What Is gRPC, and Why Does It Matter?

gRPC is a modern Remote Procedure Call (RPC) framework built on HTTP/2. It uses Protocol Buffers, a compact binary serialization format, for messages and supports unary calls, server streaming, client streaming, and bidirectional streaming. It’s designed for high-performance, low-latency communication between services or between a client and its backend.

In the Cursor ecosystem, gRPC is used for the fastest connections—when the IDE and AI backend need to quickly share detailed data or when both use HTTP/2 to send messages at the same time. Because gRPC uses HTTP/2 underneath, you get multiplexing, binary efficiency, and streaming support.

Example: Cursor sends a “code context” proto message to the AI service. The service responds with a streaming “suggestion” proto message: part of the response arrives, the IDE renders it, the next part arrives, and the IDE updates live. That bidirectional stream wouldn’t be simple under plain HTTP. With gRPC, you can have a client stream, a server stream, or a full duplex. For instance, the IDE might stream token by token as the user types, and the backend streams suggestions back simultaneously.
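Under the hood, gRPC wraps each Protocol Buffers message in a simple length-prefixed frame before sending it in HTTP/2 DATA frames: one byte for a compression flag, four bytes of big-endian length, then the message. Streaming works because the receiver can peel complete messages off the byte stream as they arrive. The sketch below implements just that framing in TypeScript; the function and class names are hypothetical, the payload would normally be serialized protobuf rather than text, and a real client would rely on a gRPC library instead.

```typescript
// gRPC "Length-Prefixed-Message" framing: 1-byte compressed flag,
// 4-byte big-endian message length, then the message bytes.
function encodeGrpcFrame(message: Uint8Array, compressed = false): Uint8Array {
  const frame = new Uint8Array(5 + message.length);
  const view = new DataView(frame.buffer);
  view.setUint8(0, compressed ? 1 : 0);
  view.setUint32(1, message.length, false); // false = big-endian
  frame.set(message, 5);
  return frame;
}

// Split an incoming byte stream back into messages. Bytes of a partial frame
// stay buffered until the rest arrives. This sketch ignores the compressed flag.
class GrpcFrameDecoder {
  private pending = new Uint8Array(0);

  push(chunk: Uint8Array): Uint8Array[] {
    const merged = new Uint8Array(this.pending.length + chunk.length);
    merged.set(this.pending, 0);
    merged.set(chunk, this.pending.length);
    const messages: Uint8Array[] = [];
    let offset = 0;
    while (merged.length - offset >= 5) {
      const length = new DataView(merged.buffer, offset).getUint32(1, false);
      if (merged.length - offset - 5 < length) break; // wait for more bytes
      messages.push(merged.slice(offset + 5, offset + 5 + length));
      offset += 5 + length;
    }
    this.pending = merged.slice(offset);
    return messages;
  }
}
```

Feeding the decoder a header plus half a message returns nothing; once the remaining bytes arrive, every complete message comes out in order, which is what lets the IDE render a suggestion piece by piece.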

Extra References:

- gRPC Tutorial [Part 1] – Basics & Protocol Buffers

The Cursor AI IDE Network Stack

Before we start, if you are new to the HTTP network stack and associated technologies, then I recommend reading the Primer section of this document first; it will set you up to understand the core technologies used by the Cursor Team when implementing and designing both the Cursor AI IDE and the backend services.

The Cursor AI IDE network stack architecture reflects some careful design decisions. The team could have chosen a simple stack and lived with its limitations, but that was not the case: they implemented a dynamic HTTP network stack focused on giving the user the best overall experience while tackling the challenges of running the Cursor AI IDE in deeply locked-down network environments, such as those found in large enterprises.